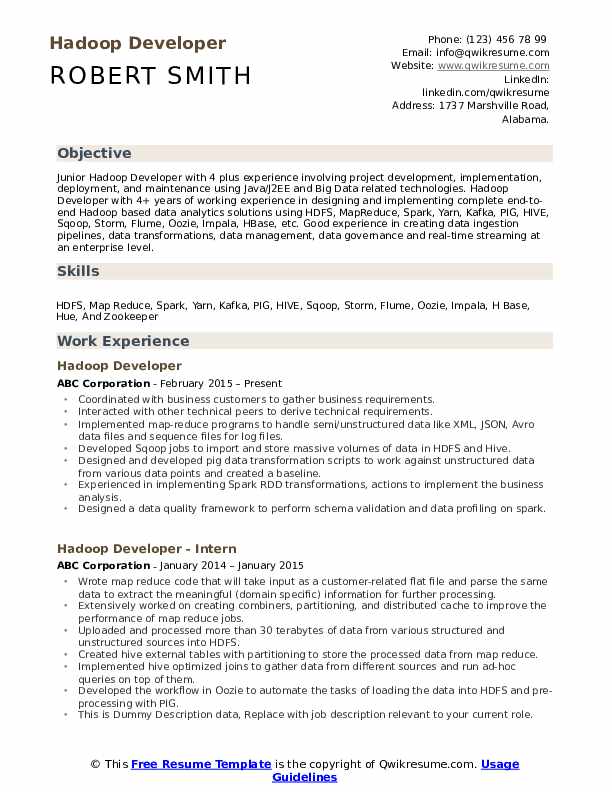

Hadoop Developer Resume

Headline : Results-driven Hadoop Developer with 7 years of experience in designing, implementing, and optimizing big data solutions using Hadoop ecosystem tools. Proven expertise in data ingestion, transformation, and real-time analytics.

Skills : HBase, Hue, Hadoop Ecosystem, MapReduce

Description :

- Collaborated with stakeholders to gather and analyze business requirements for Hadoop solutions.

- Worked with cross-functional teams to define technical specifications and project scope.

- Implemented MapReduce programs to process semi-structured data formats like XML and JSON.

- Developed Sqoop jobs to efficiently import large datasets into HDFS and Hive.

- Created Pig scripts for data transformation, enabling analysis of unstructured data sources.

- Utilized Spark for RDD transformations, enhancing data processing capabilities for analytics.

- Designed a data quality framework for schema validation and profiling using Spark.

Experience

5-7 Years

Level

Executive

Education

MSc CS

Hadoop Developer Temp Resume

Objective : Results-driven Hadoop Developer with 5 years of experience in designing, implementing, and optimizing Hadoop ecosystems. Proficient in HDFS, MapReduce, Hive, and Spark, delivering scalable solutions for big data processing.

Skills : Hadoop Ecosystem, MapReduce Programming, Data Ingestion, Hive Query Language, Spark Framework

Description :

- Designed and migrated existing MSBI systems to Hadoop, enhancing data processing capabilities.

- Developed a batch processing framework for data ingestion into HDFS, Hive, and HBase.

- Conducted systems analysis, design, and development, contributing as a key individual on complex projects.

- Resolved client issues by providing effective solutions and recommendations.

- Guided junior developers and coordinated tasks on medium to large-scale projects.

- Collaborated with development and operations teams for integrated testing on various projects.

- Prepared test data and executed detailed test plans, ensuring quality and performance.

Experience

2-5 Years

Level

Junior

Education

MSc CS

Bigdata/Hadoop Developer Resume

Objective : Detail-oriented Hadoop Developer with 2 years of experience in building and optimizing data pipelines using Hadoop ecosystem tools. Proficient in HDFS, MapReduce, and Hive for efficient big data processing and analytics.

Skills : Cassandra Database, Parquet File Format, Avro Data Serialization, HDFS Management, MapReduce Programming

Description :

- Developed data pipelines using Flume, Sqoop, and PIG to extract and store data in HDFS.

- Loaded tables from reference databases into HDFS using Sqoop, ensuring data integrity.

- Designed and implemented ETL workflows in Java for processing data in HDFS and HBase.

- Managed log file transfers from various sources to HDFS using Flume for further analysis.

- Transformed structured and unstructured data from databases into HDFS using Sqoop.

- Created Sqoop scripts for data import/export, handling incremental loads effectively.

- Developed MapReduce programs in Java for comprehensive data analysis across formats.

Experience

0-2 Years

Level

Entry Level

Education

BSc CS

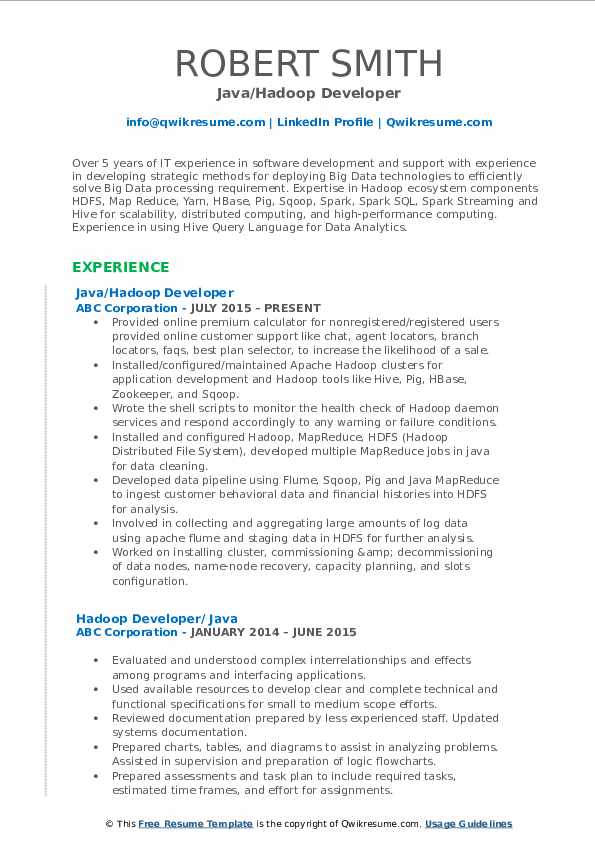

Java/Hadoop Developer Resume

Headline : Results-driven Hadoop Developer with 7 years of experience in Big Data technologies. Proficient in HDFS, MapReduce, Spark, and Hive, delivering scalable data solutions and optimizing data processing workflows for enhanced analytics.

Skills : Hadoop Ecosystem, Data Ingestion, Spark Streaming, Data Pipeline Development, Hive Query Language

Description :

- Developed and maintained scalable data pipelines using Hadoop ecosystem tools like Flume, Sqoop, and Pig for efficient data ingestion.

- Wrote shell scripts to automate health checks of Hadoop services, ensuring high availability and performance.

- Designed and implemented MapReduce jobs to process large datasets, improving data analysis efficiency.

- Managed HDFS storage, including data replication and recovery strategies to ensure data integrity.

- Collaborated with data scientists to optimize data models and improve query performance using Hive and Spark SQL.

- Conducted performance tuning of Hadoop jobs, achieving significant reductions in processing time.

- Participated in the installation and configuration of Hadoop clusters, ensuring optimal resource allocation and management.

Experience

5-7 Years

Level

Executive

Education

MSc CS

Senior ETL And Hadoop Developer Resume

Headline : Results-driven Hadoop Developer with 7 years of experience in big data technologies. Proficient in Hadoop ecosystem tools, data processing, and optimization. Skilled in ETL processes, data modeling, and performance tuning for large datasets.

Skills : Hadoop Ecosystem, Impala SQL, Ambari Administration, Hue Interface, Apache Storm

Description :

- Analyzed incoming data processing through programmed jobs, delivering outputs for cross-team access and analysis.

- Utilized Cloudera Manager to oversee Hadoop operations and ensure system reliability.

- Collaborated with stakeholders on data mapping and modeling to align with business needs.

- Documented architectural best practices for building scalable systems on AWS.

- Employed Pig as an ETL tool for data transformations and pre-aggregations before HDFS storage.

- Configured and launched Hadoop tools on AWS, optimizing component integration.

- Integrated logs from physical machines into HDFS using Flume for enhanced data accessibility.

Experience

5-7 Years

Level

Executive

Education

MSc CS

Bigdata/Hadoop Developer Resume

Headline : Results-driven Hadoop Developer with 7 years of experience in designing and implementing big data solutions. Proficient in Hadoop ecosystem, data processing, and analytics, leveraging tools like HDFS, MapReduce, Hive, and Spark to optimize data workflows.

Skills : Apache Oozie, Cassandra Database, Hadoop Ecosystem, MapReduce Programming

Description :

- Installed and configured Hadoop components, developing MapReduce jobs for data cleaning and preprocessing.

- Configured and utilized Hadoop ecosystem tools, enhancing data processing efficiency.

- Executed data import/export tasks using Sqoop for seamless integration with HDFS and Hive.

- Designed ADF workflows for scheduling data copy and executing Hive scripts, improving data management.

- Created dispatch jobs for loading data into Teradata, integrating big data analytics with Hadoop and Spark.

- Contributed to the implementation of Cloudera Hadoop environments, ensuring optimal performance.

- Worked with diverse data sources, including MongoDB and Oracle, to support data integration efforts.

Experience

5-7 Years

Level

Executive

Education

M.S. CS

Java/Hadoop Developer Resume

Objective : Results-driven Hadoop Developer with 5 years of experience in designing and implementing big data solutions. Proficient in Hadoop ecosystem tools, data processing, and ETL workflows to drive business insights and optimize data management.

Skills : Visual Studio, Hadoop HDFS, MapReduce, Spark SQL, HiveQL

Description :

- Collaborated with engineering teams to design and implement data flow solutions using Hadoop, Hive, and Java, addressing complex business requirements.

- Developed and optimized MapReduce jobs on large-scale Hadoop clusters, processing billions of records daily for timely reporting.

- Worked closely with QA and Operations teams to support ETL platforms and ensure seamless data integration across systems.

- Executed data extraction, transformation, and loading from diverse sources into data warehouses, enhancing data availability.

- Created insightful metrics and dashboards to support business intelligence initiatives and decision-making.

- Mentored junior engineers, providing training and conducting code reviews to ensure best practices in data application development.

- Implemented proof of concepts (POCs) using Hadoop, Yarn, and Python, demonstrating innovative data processing solutions.

Experience

2-5 Years

Level

Executive

Education

M.S. CS

Hadoop Developer Resume

Headline : Results-driven Hadoop Developer with 7 years of experience in Big Data technologies, specializing in Hadoop ecosystem tools like Hive, Pig, and Spark. Proven track record in data processing, ETL, and analytics to drive business insights.

Skills : Oozie Workflows, Kafka Messaging, Cassandra Database, Parquet File Format, Avro Data Serialization

Description :

- Installed and configured Apache Hadoop clusters, utilizing YARN for resource management and tools like Hive, Pig, and Spark for data processing.

- Utilized Sqoop for efficient data transfer between relational databases and HDFS, and Flume for real-time log data ingestion.

- Developed and optimized MapReduce programs for data cleansing and transformation, ensuring data quality for analysis.

- Scheduled and managed incremental data loads into staging tables, enhancing data availability for analytics.

- Implemented Kafka as a messaging system to facilitate real-time data ingestion from various sources into HDFS.

- Executed data transformations using Pig scripts, performing joins and aggregations before storing results in HDFS.

- Created and managed Hive external tables with partitioning strategies to optimize query performance on large datasets.

Experience

5-7 Years

Level

Executive

Education

MSc CS

Big Data/Hadoop Developer Resume

Objective : Results-driven Hadoop Developer with 5 years of experience in designing, implementing, and optimizing big data solutions. Proficient in Hadoop ecosystem tools, data processing, and analytics to drive business insights and efficiency.

Skills : Hadoop Ecosystem, Oozie Workflow Scheduler, Zookeeper Coordination, Apache Storm Streaming, Scala Programming

Description :

- Collaborated with business users to gather requirements and enhance data processing workflows.

- Loaded and transformed large datasets from HDFS using Sqoop for further analysis.

- Executed Hadoop jobs to process millions of records efficiently.

- Transformed legacy data into HDFS and HBase using Sqoop for better accessibility.

- Developed MapReduce programs to analyze and extract insights from large datasets.

- Utilized Hive queries and Pig scripts to identify data patterns and trends.

- Monitored and optimized Hadoop scripts for efficient data loading into Hive.

Experience

2-5 Years

Level

Junior

Education

M.S. CS

Hadoop Developer Resume

Summary : Results-driven Hadoop Developer with 10 years of experience in designing and implementing scalable data solutions. Proficient in data ingestion, processing, and optimization using Hadoop ecosystem tools, ensuring high performance and reliability.

Skills : Apache Oozie, Java Development, Shell Scripting, Hadoop Ecosystem, Apache Spark

Description :

- Enhanced performance by optimizing Hadoop sub-projects, migrating data using Sqoop, and conducting regular backups.

- Integrated diverse data sources, including RDBMS and flat files, into Hadoop.

- Designed and built scalable distributed data solutions leveraging Hadoop technologies.

- Analyzed business requirements to develop and design effective data processing algorithms.

- Executed delta processing and incremental updates using Hive, ensuring data integrity.

- Optimized MapReduce and Hive scripts for improved scalability and performance.

- Created and managed Hive tables, ensuring efficient data loading and querying.

Experience

7-10 Years

Level

Senior

Education

MSc CS

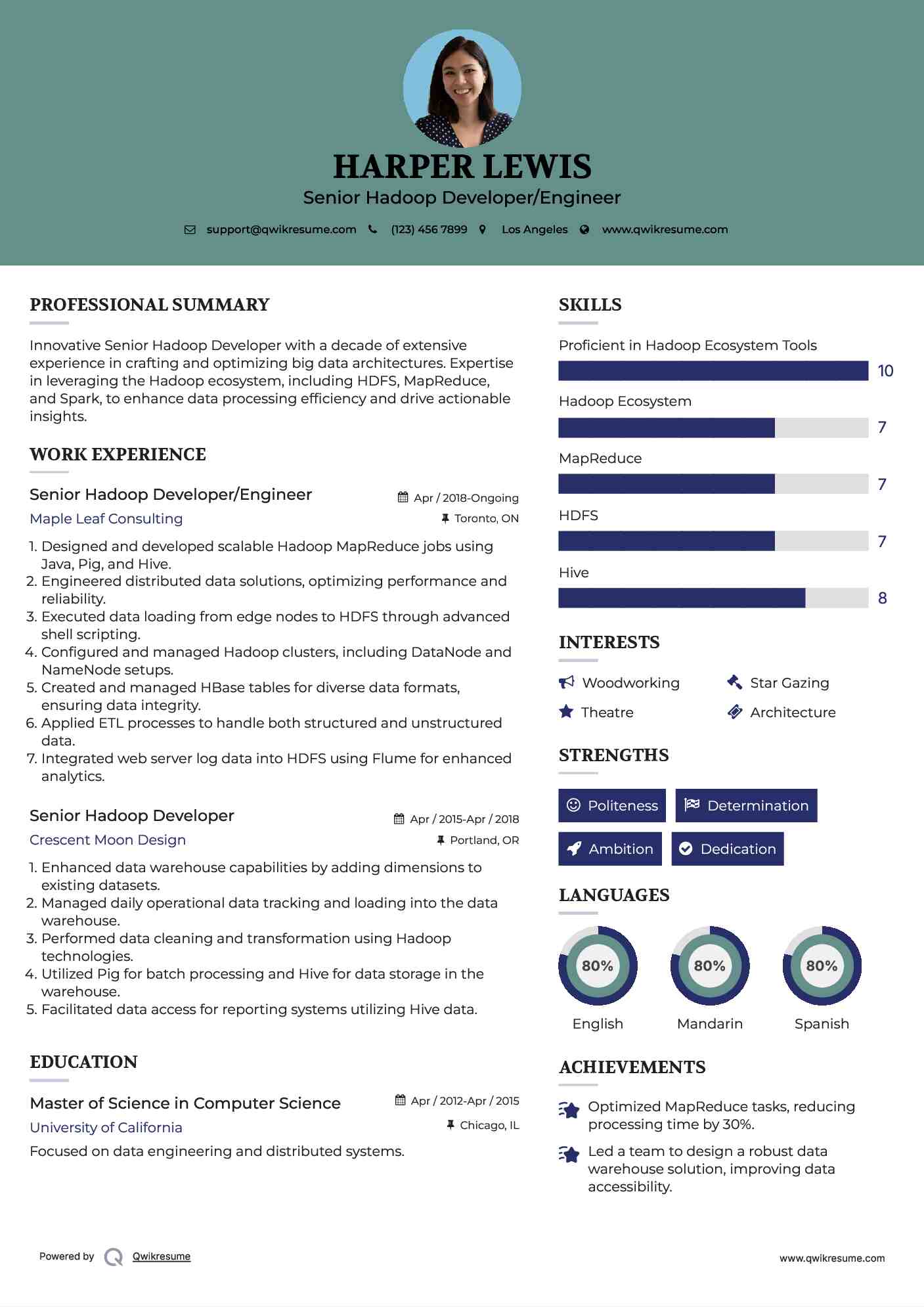

Senior Hadoop Developer/Engineer Resume

Summary : Innovative Senior Hadoop Developer with a decade of extensive experience in crafting and optimizing big data architectures. Expertise in leveraging the Hadoop ecosystem, including HDFS, MapReduce, and Spark, to enhance data processing efficiency and drive actionable insights.

Skills : Proficient in Hadoop Ecosystem Tools, Hadoop Ecosystem, MapReduce, HDFS, Hive

Description :

- Designed and developed scalable Hadoop MapReduce jobs using Java, Pig, and Hive.

- Engineered distributed data solutions, optimizing performance and reliability.

- Executed data loading from edge nodes to HDFS through advanced shell scripting.

- Configured and managed Hadoop clusters, including DataNode and NameNode setups.

- Created and managed HBase tables for diverse data formats, ensuring data integrity.

- Applied ETL processes to handle both structured and unstructured data.

- Integrated web server log data into HDFS using Flume for enhanced analytics.

Experience

7-10 Years

Level

Management

Education

M.S.

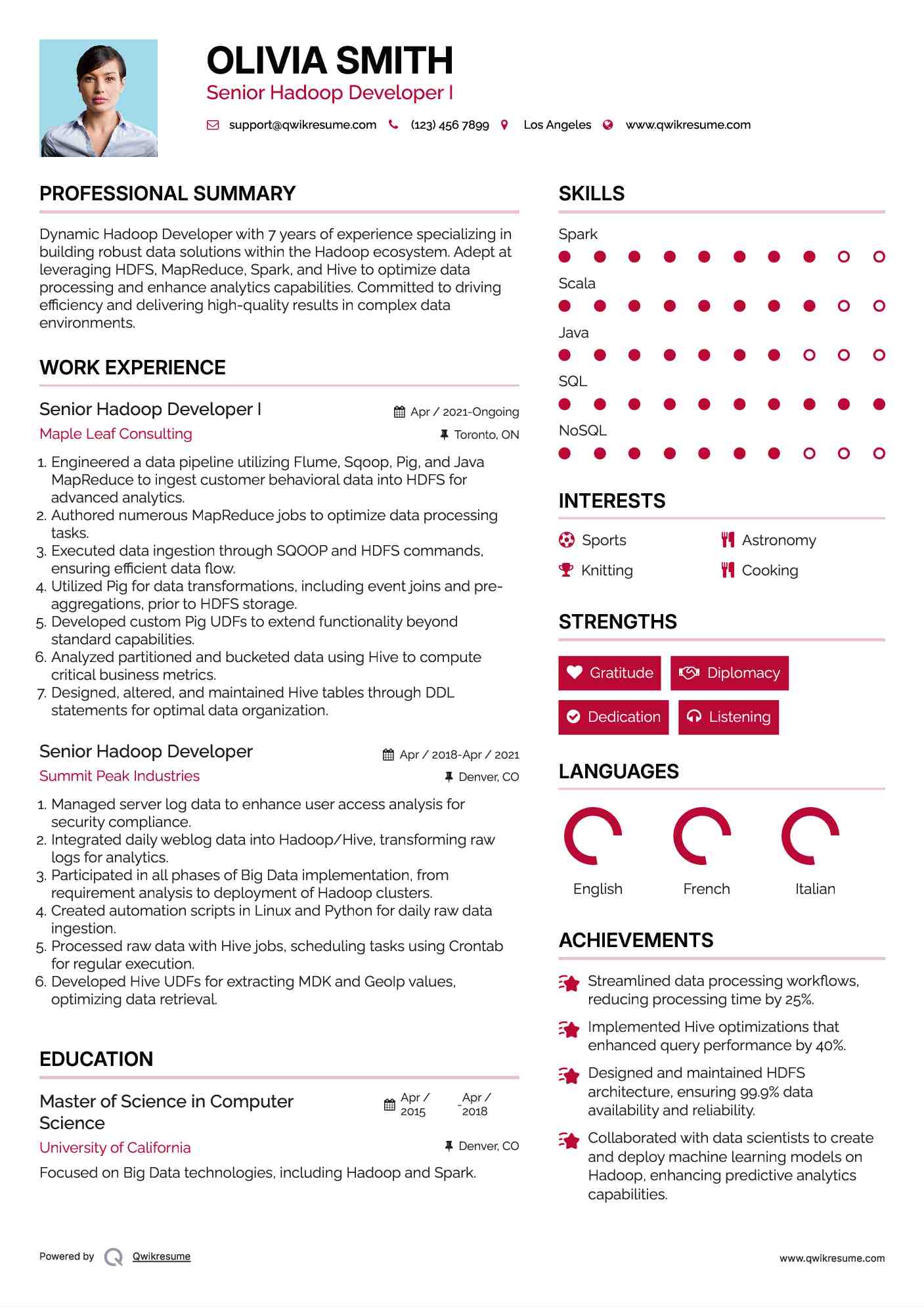

Senior Hadoop Developer I Resume

Headline : Dynamic Hadoop Developer with 7 years of experience specializing in building robust data solutions within the Hadoop ecosystem. Adept at leveraging HDFS, MapReduce, Spark, and Hive to optimize data processing and enhance analytics capabilities. Committed to driving efficiency and delivering high-quality results in complex data environments.

Skills : Spark, Scala, Java, SQL, NoSQL

Description :

- Engineered a data pipeline utilizing Flume, Sqoop, Pig, and Java MapReduce to ingest customer behavioral data into HDFS for advanced analytics.

- Authored numerous MapReduce jobs to optimize data processing tasks.

- Executed data ingestion through SQOOP and HDFS commands, ensuring efficient data flow.

- Utilized Pig for data transformations, including event joins and pre-aggregations, prior to HDFS storage.

- Developed custom Pig UDFs to extend functionality beyond standard capabilities.

- Analyzed partitioned and bucketed data using Hive to compute critical business metrics.

- Designed, altered, and maintained Hive tables through DDL statements for optimal data organization.

Experience

5-7 Years

Level

Executive

Education

MSCS

Senior Hadoop Developer Resume

Summary : Accomplished Senior Hadoop Developer with a decade of experience in architecting and optimizing big data solutions. Expert in leveraging the Hadoop ecosystem, including HDFS, Spark, and Hive, to drive data ingestion, processing, and analytics, ensuring high performance and scalability. Committed to delivering innovative solutions that meet complex business needs and enhance data-driven decision-making.

Skills : Proficient in HQL, Expertise in Hadoop Ecosystem, ETL Processes, Data Modeling, Data Analysis

Description :

- Configured and implemented a comprehensive Big Data stack for multiple projects.

- Led a team of developers, ensuring timely delivery of high-quality solutions.

- Managed project timelines and deliverables while overseeing technical accuracy.

- Conducted code reviews to uphold coding standards and best practices.

- Collaborated with architects and business leaders to design applications using Hadoop, Spark, and other technologies.

- Provided technical insights to the QA team for effective test development.

- Streamlined workflows for data processing and analytics, enhancing overall system performance.

Experience

10+ Years

Level

Senior

Education

M.S.